Fox Renderfarm Blog

What Is Jibaro? The Story and Techniques Behind the Production of Jibaro in "Love, Death & Robots"

Behind The Scenes

Jibaro, one of the episodes of Netflix's series Love, Death & Robots, has drawn a strong response from audiences. In this article, follow Fox Renderfarm, the best cloud rendering service provider, to learn the story behind Jibaro.

Virtual Production: Past, Present, and Future (2)

Behind The Scenes

Virtual Production is an emerging film and TV production system that includes a variety of computer-aided production methods designed to encourage innovation and save time, with the help of real-time software such as Unreal Engine.

3D Tutorials: How to Make Dogs in Togo (1)

Behind The Scenes

Togo is a very good film produced by Walt Disney Pictures. Here Fox Renderfarm, a leading cloud rendering service provider, shows you how to make dogs like the ones in Togo.

3D Tutorials: How to Make Dogs in Togo (2)

VFX

Togo is a very good film produced by Walt Disney Pictures. Here Fox Renderfarm, a leading cloud rendering service provider, shows you how to make dogs like the ones in Togo.

3D Tutorials: How to Make Dogs in Togo (3)

VFX

Togo is a very good film produced by Walt Disney Pictures. Here Fox Renderfarm, a leading cloud rendering service provider, shows you how to make dogs like the ones in Togo.

Virtual Production: Past, Present, and Future (3)

Behind The Scenes

Virtual Production is an emerging film and TV production system that includes a variety of computer-aided production methods designed to encourage innovation and save time, with the help of real-time software such as Unreal Engine.

Virtual Production: Past, Present, and Future (1)

Behind The Scenes

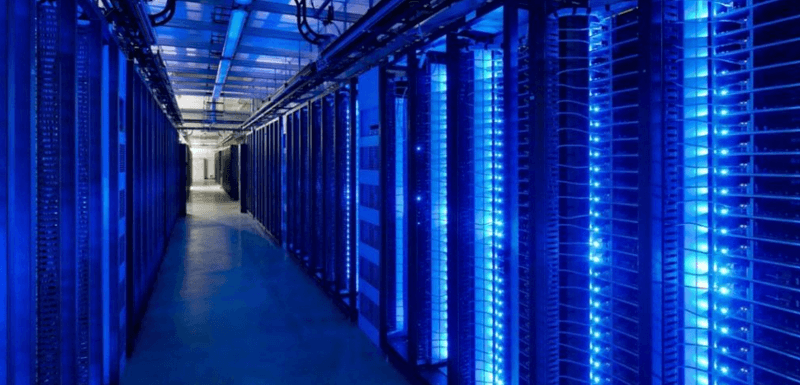

Virtual Production is an emerging film and TV production system that includes a variety of computer-aided production methods designed to encourage innovation and save time.

How does the studio directly produce the final shot? (2)

Behind The Scenes

How does the studio produce the final shot directly? The production team of Disney's new drama The Mandalorian used real-time rendering technology to build the digital virtual scenes in advance.

Behind the Scenes: Spy House Production (3)

Behind The Scenes

This article, provided by your TPN-Accredited 3ds Max render farm, shares how a spy house was made with 3ds Max. The main software used in this article includes ZBrush and Marmoset Toolbag 3. We first show the final result and then walk through the overall production process.

Behind the Scenes: Spy House Production (2)

Behind The Scenes

This article, provided by your TPN-Accredited 3ds Max render farm, shares how a spy house was made with 3ds Max. The main software used in this article includes ZBrush and Marmoset Toolbag 3. We first show the final result and then walk through the overall production process.

White Snake’s Special Effects and Animated Performances: Behind the Scenes

White Snake

Here we reveal the special effects and animated performances behind the scenes of White Snake.